Concept UI Design

Designing a user interface for a sci-fi gadget, keeping in mind the user’s needs and the tasks performed with it

Takeaway: Designing for AR is all about feeling the design!

Challenge: Storytelling. How to simplify the fiction and explain the purpose of the gadget, its use and its interaction?

About: This project was a deliverable for one of my core courses, HCC-613 | User Interface Design and Implementation. The challenge was to select a sci-fi device and design a UI for it, with a clear demonstration of how the user would interact with the device.

Role: UX + UI Designer

Methods used: User Research, Task Analysis, Prototyping, UI design, Storytelling, Video making, Designing for AR

Tools: iMovie, InVision, Adobe After Effects

Duration: Research: 2 weeks, Prototype: 3 weeks

Overview

Brief:

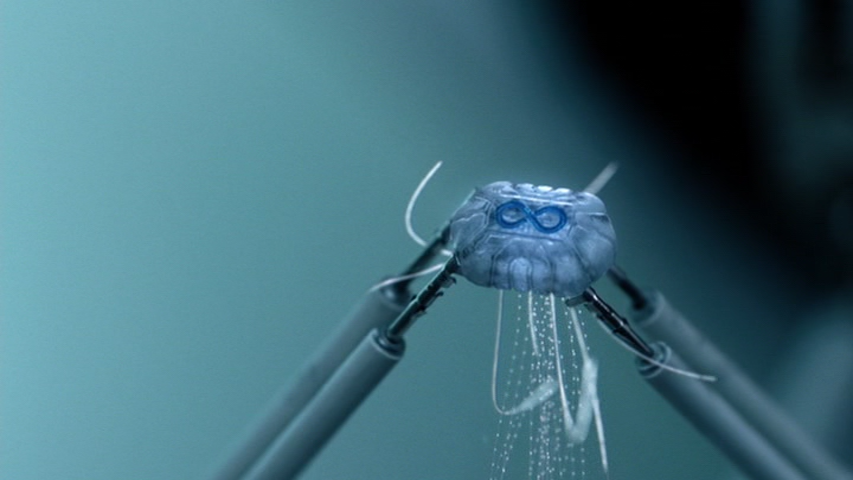

I chose the fiction drama series The 100 as my reference. The technology is called A.L.I.E. (Applied Lucent Intelligence Emulator), a sentient AI whose core objective is to make life better for mankind. This AI resides on a cybernetic implant which connects directly with the brain stem of the user. Once the user wears this chip, the user’s brain enters a completely different virtual dimension called “The City of Light”. The user’s physical body is at rest in the same dimension as before, but it’s the brain that is doing all the travelling!

My user and how the chip works:

Clarke Griffin, the protagonist, is my user. The chip is inserted via the neck, and the physical body needs to be at rest while the chip is inside. In the series, the chip stores the memories of all its past users, and the current user can draw on these past experiences to make better decisions in the present.

My Tweak:

I wanted a simple storyline so that it would be easy to explain the scenes and the interactions. In my story, A.L.I.E. is a personal assistant that helps my user achieve her mission. The story goes like this: Clarke (protagonist) uses A.L.I.E. (technology) to find Rebecca (antagonist), who is planning a major attack on mankind. There is only one dimension in my story.

Challenges

The chip does not have a built-in screen, and it needs to be inserted in the body to function. A secondary challenge is depicting this design and interaction to a third person.

User Research

I strongly believe that research is the fundamental step in designing. Understanding the user, the user’s needs and expectations, and the problem areas is very important. For this project, I was already familiar with the outline of the character since I followed the TV series. This helped me to quickly understand the character sketch, needs and tasks.

User Data:

| Characteristics | Description |
|---|---|
| Name | Clarke Griffin |
| Gender | Female |
| Language | English |
| Personality | Fearless, Brave, Headstrong, Determined, Intelligent, Leader, Loyal, Caring, Risk taker, Emotional |
| Skills | Quick learner, Witty, Can fight |
| Interests | Drawing, Travelling, Learning new languages |
| Physical Appearance | Height 5'4", Blonde, Pale complexion, Blue eyes, Round face |
| Usual Dressing | Long-sleeve shirts with crochet cuffs, Jacket, Dark jeans, Boots |
| Negative Traits | Impulsive, Too emotional sometimes |

Empathy map

Hierarchical Task Analysis

Empathy Map:

Empathy maps help to understand the user’s feelings and how the user will behave in a given situation. By knowing the user’s feelings and thought process, the designer gets an idea of what needs to be kept in mind while designing. For example, if the user is a superhero and the device is a fighting suit, the designer has to make sure that the suit offers agility and is not claustrophobic for the user.

What I understood from my empathy map: Clarke needs navigation and access to maps, and must be able to take pictures, remember locations, contact her friends and carry a weapon.

Task Analysis:

Hierarchical Task Analysis (HTA) is helpful in understanding the flow of tasks and their subtasks, which tasks to prioritize and which tasks have options within them. By knowing the task flow, the designer can design the scenes and elements required by the user without missing any detail. While writing the HTA, I went over the entire task flow multiple times, which allowed me to understand it thoroughly.
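To make the idea of tasks and subtasks concrete, here is a minimal sketch of an HTA as a tree, printed as a numbered outline. The `Task` class, the `outline` helper and the task names are all assumptions made up for illustration; they are not the actual HTA from this project.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """One node in a hierarchical task analysis (HTA) tree."""
    name: str
    subtasks: list["Task"] = field(default_factory=list)


def outline(task: Task, prefix: str = "0") -> list[str]:
    """Return numbered lines (0, 1, 1.1, ...) in depth-first order."""
    lines = [f"{prefix} {task.name}"]
    for i, sub in enumerate(task.subtasks, start=1):
        # Children of the root are numbered 1, 2, 3; deeper levels get 1.1, 1.2, ...
        child_prefix = str(i) if prefix == "0" else f"{prefix}.{i}"
        lines.extend(outline(sub, child_prefix))
    return lines


# Hypothetical task tree (illustrative names only, not the project's real HTA).
navigate = Task("Reach a destination", [
    Task("Ask for directions", [
        Task("Say the destination"),
        Task("Confirm the route"),
    ]),
    Task("Follow the map", [
        Task("Check the ETA"),
    ]),
    Task("Save the location"),
])

for line in outline(navigate):
    print(line)
```

Writing the flow out this way makes it easy to spot a task with no subtasks yet, or a subtask that belongs under a different parent.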

Environment analysis:

Environment analysis helps to determine the external factors that come into the picture while the user is performing the tasks. This analysis describes factors like place, atmosphere, situations, physical strength, and so on.

For my scene: the weather is moderately hot (65-70 °F), it is daytime, and the place is a quiet, suspicious location in a suburban area.

Key points from user data:

All these techniques helped me to understand my character and device in depth. Hierarchical Task Analysis was the most challenging but was equally important. I had to brainstorm before writing each task and its subtasks, which made me go over the entire flow again. This process helped me visualize how the device would actually work and how my character would perform. Empathy maps helped me to gain insights into the user’s behavior and attitude. By the end of this task, I had a user persona and an idea of the background scene settings. At this point I drafted the list of functions to include:

Navigation is required to travel to places. There should be options to show the ETA, remember the location and display the map.

Feature to call/message a person. There should be an option to record the call if needed. Maintaining contact is very important for my character.

Feature to send pictures/videos. This would help her to make sure that the clues she is finding belong to the enemy.

Feature to know the weather at the current location and at another location. Since my character is travelling to unknown places in search of the enemy, knowing the weather conditions would help her.

Ideate and Design

After collecting all the user data and deriving design mandates from it, the next step was to brainstorm ideas for putting everything on a screen. But the biggest question was: how should I design the screen? Where would the display be? Since the device used intangible technology, designing a physical screen made no sense.

I followed multiple brainstorming techniques to get to a solution. During this process, I tried Chain storming (iterating on the process to get the desired solution), Role storming (analyzing the problem from a third person’s perspective), Gap filling (dividing the user journey into smaller tasks) and the most effective method, Mind mapping (subdividing the tasks and jotting down ideas to achieve them).

Information distribution | Screen design

Screen Ideas:

Position: I decided that the display would be superimposed on the user’s vision, in Augmented Reality form.

Input/Output: The device would talk with the user like a personal assistant. Input and output type would be speech, enabling the user to be aware of the feedback and know which processes are currently happening.

Screen area: As the device connects internally with the brain, the screen gets as much area as the eyes can see. I decided to place important information in the center and within the line of sight.
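The speech input/output idea above can be sketched as a tiny command router: a spoken request goes in, and the matched function plus a spoken confirmation comes out. This is only an illustration under stated assumptions; the `INTENTS` keyword table, the `route` function and the feedback phrases are hypothetical and were not part of the prototype, which was built as linked screens.

```python
# Hypothetical keyword table: each planned function maps to a few trigger words.
INTENTS = {
    "navigation": ("navigate", "directions", "map"),
    "contact": ("call", "message"),
    "camera": ("picture", "photo", "video"),
    "weather": ("weather",),
}


def route(utterance: str) -> tuple[str, str]:
    """Return (function, spoken feedback) for a spoken request."""
    words = [word.strip(".,!?") for word in utterance.lower().split()]
    for function, keywords in INTENTS.items():
        if any(word in keywords for word in words):
            # Spoken feedback tells the user which process has started.
            return function, f"Opening {function}."
    return "unknown", "Sorry, I did not understand that."


print(route("Call my friend"))
print(route("What is the weather here?"))
```

Returning the feedback together with the matched function keeps the user aware of what the device is doing, which is the point of making speech both the input and the output.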

Low fidelity screen designs:

I used the list of functions described above while designing the screens. I followed an iterative process to make sure that I was considering all the user needs.

Medium Fidelity:

Now was the time to give a more realistic touch to my idea! I had already ideated the screens, so I used them as my base and designed on top of them. I used Photoshop to create the screens and InVision to link them. Thanks to Noun Project for providing amazing free icons!

The functions I’ve included are: Navigation Maps, Contact (call + message), Sending a photo/video to any contact and Weather. Below I have attached a few screens for reference, but they are in no specific order. The function each screen caters to is highlighted on the top right. Please click the link to see the entire prototype: View Prototype

Home Screen

Navigation screen

Weather screen

Calling a friend screen

Clicking a picture

Message screen

Interactive Prototypes:

Navigation: This gif shows how the user can use the UI to get the map and get assistance in reaching the destination. The device is also capable of saving the location for future use.

Call: This gif shows how the user can place a call. The device also offers a messaging feature whose input is the user’s voice.

Click pictures: This gif explains how to select a particular object from the surroundings and click its picture. It also enables sharing with friends.

Weather: This gif shows how the weather feature could be used. The user can also change the location and check the weather for a particular place.

Storytelling

This part is crucial because if the viewer doesn’t understand the context, the interface will not make any sense. I came up with a basic storyline of how technology helps to defeat evil. The data collected from user research was used to create my character, Clarke. The way she thinks and behaves was written on the basis of this data. While writing the task analysis, I had a vague storyline in mind, which I refined at this point. I wrote multiple drafts and iterated to make sure I was not missing any important details. Defining the story helped me look at the picture closely and understand exactly how the device is helpful, how the user interacts with it, the dialogues and how the scene ends.

Story Outline:

“Rebecca is the chairperson of a very well-known pharmaceutical company called ‘Big Pharma’. Big Pharma has been in the news recently for suspiciously smuggling unknown chemicals from abroad. The FDA (Food and Drug Administration) receives classified information that these chemicals are lethal and generally used in manufacturing high-level explosives. The FDA hires Clarke, a private detective, to obtain more evidence and solve this case. Clarke is one of the very few detectives with a track record of never losing a case. Clarke is known for the A.I. technology she has, called ALIE. ALIE is like her personal assistant who can understand like a human and work faster than a computer. ALIE takes the form of a device which Clarke embeds in her body, and ALIE connects with Clarke’s brain stem. ALIE helps Clarke in every step she takes. After following the case closely for a few months, Clarke gets a tip that Rebecca’s main motive behind hoarding these chemicals is to plan a genocide. Rebecca is going to execute the plan one week from the day Clarke receives the tip. Clarke works very hard to get more evidence and the exact details of the plan. Finally, Clarke’s hard work pays off and she gets some very promising clues that lead to Rebecca. Clarke, along with ALIE, sets out to put an end to this plan and save mankind!”

Final Video (Coming soon!)

The final fidelity prototypes were to be presented in the form of a video telling a story about how users would use the device. The video would focus on explaining the interactions and the working of the device. I’m currently in the process of making some changes; it’ll be up very soon!

Things I kept in mind while designing:

Clearly differentiate between elements that are clickable and those that are static.

Keep the UI clean and do not clutter the line-of-sight area.

Prevent distractions. Only the objects, action feedback or information that is necessary should be included.

Decide which elements should be 3D and which should be 2D. However, for this video I’ve implemented only 2D objects.

Define the environment for use: day or night.

Use the right amount of light and shadow for elements.

Conclusion and Future Prospects

This project improved my design thinking skills manyfold. I also got hands-on experience with InVision and Adobe After Effects. Although AR has many possibilities and there were many creative ideas for designing the interface, I wanted to come up with something that could be implemented now rather than offer futuristic options. I learned how to tell a story around my idea and put that into a design. This skill will help me visualize an interface and become aware of its shortcomings at an early stage.

Future prospects:

My initial idea was to use “Eye interaction”. Since my device was very smart, I thought it would be very creative to portray this interaction. However, depicting it was challenging for me, and I hope to find creative ways to implement it. I would also like to explore designing for safety further: the device would give a forewarning if any mishap is about to happen. Another aspect which I did not talk about was charging the device. My best bet would be using the body’s kinetic energy, as the body would be in motion while using the device. Lastly, making the device accessible for all!

References | Design inspirations:

https://blog.prototypr.io/6-problems-ui-ux-in-ar-7942af4a9118

https://www.smashingmagazine.com/2019/06/designing-ar-apps-guide/

https://the100.fandom.com/wiki/A.L.I.E.

https://thenounproject.com/

https://www.behance.net/gallery/54798681/Card-View-problems-UX-inAR

Google Maps AR